About this Project

This project is initiated, run, and maintained by KnowledgeMiner Software, a research, consulting and software development company in the field of high-end predictive modeling.

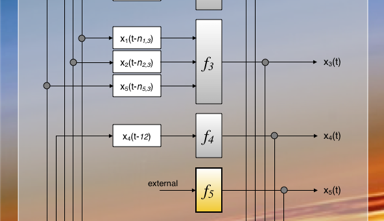

The objective is to model and predict global temperature anomalies through self-organizing knowledge extraction from public data. The project predicts temperatures of nine latitudinal bands 36 months ahead, which is a rather short-term forecast horizon for climate. In the future, the project may extend to quarterly and yearly projections to also cover medium-term goals. It is open to every interested person. The results will be published, updated, and archived on this site on a regular basis as new data become available, so that former predictions and trends can be compared with actual data.

Although we use the terms Climate Change and Global Warming as well-known and well-established names for the problem, it is important to note that this project is impartial and that no public, private, or corporate funding is involved. It is entirely independent, transparent, and open in its results. Moreover, the independence and impartiality of this project are substantially driven and warranted by the way the prediction models are developed: autonomously, by self-organizing knowledge extraction from observed data.

Why this Project?

Mathematical modelling is at the core of many decision support systems. Like many problems in economics, ecology, biology, biochemistry, sociology, and life sciences, the earth climate system is ill-defined and can be characterized by:

- insufficient a priori information about the system to adequately describe its inherent relationships,
- a large number of variables, many of which are unknown and/or cannot be measured,
- noisy data available in very small to very large data sets,
- vague and fuzzy objects whose variables have to be described adequately.

For all such modelling problems, this means we have to:

- apply a systematic, holistic approach to modelling,
- take into account the inadequacy of a priori information about the real-world system,
- describe the vagueness and uncertainties of variables and, consequently, uncertainty of results and
- handle very small to very large sets of noisy data.

For ill-defined systems, the classical hard approach, which assumes that the world can be understood objectively and that knowledge about the world can be validated through empirical means, needs to be replaced by a soft systems paradigm that can better describe vagueness and imprecision. This paradigm is based on the observation that humans have only an incomplete and rather vague understanding of the nature of the world but are nevertheless able to solve unexpected problems in uncertain situations.

Systems can be modelled through deductive logical-mathematical methods (theory-driven approach) or by inductive modelling methods (data-driven approach). Deductive methods have been used to advantage for well-understood problems that obey well-known principles (microscopic approach). The spectacular results in aerospace are prime examples of this approach: here, the theory of the object being modelled is well known, and the object obeys known physical laws.

In contrast, inductive methods are used when macroscopic models (sometimes termed black box models) are the only alternative. These models are derived from real physical data and represent the relationships implicit within the system without knowledge of the physical processes or mechanisms involved.
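As a purely illustrative sketch of the inductive idea, and not the method implemented in KnowledgeMiner, the following Python example derives a model from observations alone. It fits two hypothetical candidate models to synthetic, noisy data and lets a held-out part of the data, rather than prior theory, decide which candidate to keep. All function names, the candidate set, and the data are assumptions made for this illustration.

```python
import random

def fit_constant(xs, ys):
    """Candidate 1 (hypothetical): y = c, ignoring x."""
    c = sum(ys) / len(ys)
    return lambda x: c

def fit_linear(xs, ys):
    """Candidate 2 (hypothetical): y = a + b*x, closed-form least squares."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = my - b * mx
    return lambda x: a + b * x

def mse(model, xs, ys):
    """Mean squared error of a fitted model on the given samples."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def select_model(xs, ys, candidates):
    """Fit each candidate on even-indexed samples and keep the one
    with the lowest error on the odd-indexed (held-out) samples."""
    train_x, train_y = xs[0::2], ys[0::2]
    check_x, check_y = xs[1::2], ys[1::2]
    scored = []
    for name, fit in candidates.items():
        model = fit(train_x, train_y)
        scored.append((mse(model, check_x, check_y), name, model))
    _, best_name, best_model = min(scored, key=lambda t: t[0])
    return best_name, best_model

# Synthetic "observations": the generating rule below is an assumption
# of this example and is never shown to the selection procedure.
rng = random.Random(0)
xs = [i / 10 for i in range(40)]
ys = [1.0 + 2.0 * x + rng.gauss(0, 0.1) for x in xs]

best_name, best_model = select_model(
    xs, ys, {"constant": fit_constant, "linear": fit_linear}
)
print(best_name, round(best_model(1.0), 2))
```

The selection step uses only data that was not used for fitting, so the choice of model structure is driven by the observations themselves. Real inductive modelling tools search far larger candidate spaces; this sketch only shows the principle on two candidates.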

In the real world, a vast treasure trove of data containing useful information about the behaviour of systems is continuously being amassed. This is priceless information, which only needs to be trawled and suitably mined to transform it into useful knowledge that will expose the causal relationships between the principal variables. Theory-driven approaches to modelling are unduly restrictive to this end because of insufficient a priori knowledge, the complexity and uncertainty of the objects, and the exploding time and computing demands.

The models, predictions, and results of this project are therefore based entirely on parallel implementations of data-driven, self-organizing, high-dimensional knowledge extraction technologies in our KnowledgeMiner software. We invite interested persons to participate in this ongoing project and to gain more knowledge about the development of the earth's climate from the huge amount of observed data available from NASA, among other sources.

We hope this project will provide a useful, transparent, and objective contribution to short- to medium-term climate modeling and prediction efforts.

Frank Lemke

KnowledgeMiner Software

Acknowledgment

Special thanks to Julian Miller, who helped promote the software behind this project from the beginning, and to Anna Stathaki, Robert E. King, Volodymir Stepashko, and Pavel Kordik for their thoughts, ideas, and contributions to this project.

The idea

The world around us is getting more complex, more interdependent, more connected, and more global. We can observe it, but we cannot understand it because of its complexity and the myriad interactions that are impossible to know, let alone foresee. Uncertainty and vagueness, coupled with rapid developments, radically affect humanity. Though we observe these effects, we most often do not understand the consequences of any actions, the dynamics involved, or the interdependencies of real-world systems, in which system variables are dynamically related to many others and where it is usually difficult to differentiate causes from effects.

We are facing these complex problems, which do require decision-making, but the means – the models – for understanding, predicting, simulating, and, where possible, controlling such systems are increasingly missing. This is more and more the situation with many real-world problems.

© 2010-2013 KnowledgeMiner Software
